INSIDE AI #14: New Report with Insider Quotes, Meta Hires, OpenAI Insider Risk, Chip Smuggling & Whistleblowing, Comments on Whistleblowing in RAISE Act and California Report on Frontier AI Policy

Edition 14

In This Edition:

Key takeaways:

New Publication on AI Whistleblowing to which we contributed some first quotes from our anonymous insider survey

Insider Currents

Meta's Superintelligence Push: Zuckerberg's Personal Recruitment Drive

OpenAI Changes Up ‘Insider Risk’ Team (And What is an ‘Insider Risk’ Team, Anyways?)

Whistleblower Program to Combat AI Chip Smuggling & Recent Report by CNAS

Policy - Our Comments on…

RAISE Act has Passed New York Assembly - Whistleblower Protections Removed in Last Iteration (But Not All is Lost)

Joint California Working Group Recommends Whistleblower Protections

Publication Announcement: Whistleblower Protections for AI Employees

We are happy to share the public release of a report written by

, , CARMA, with contributions from us. In a nutshell:

Unlike other high-risk industries like aviation or nuclear energy, AI has no dedicated federal whistleblower protections for employees who witness dangerous practices.

This protection gap is particularly dangerous because AI companies operate with secrecy while government agencies lack the technical expertise to effectively oversee them. Current protections are inadequate. AI employees must either rely on patchy state laws or try to frame safety concerns as securities fraud under existing financial regulations.

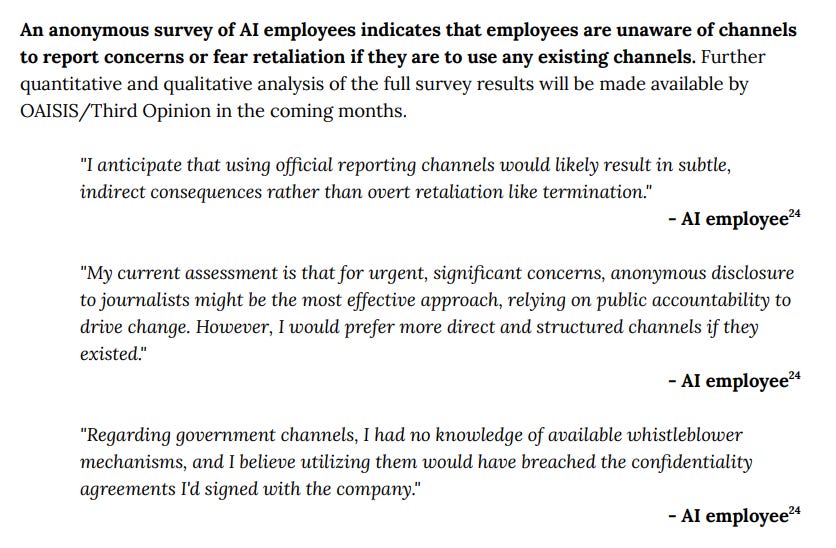

We provided some quotes from our ongoing anonymous survey with individuals working at frontier AI companies (we are looking for more respondents, if you can think of someone!) to demonstrate how this ‘gap’ feels on the inside:

Quotes are rephrased.

The report recommends comprehensive federal legislation that would protect AI workers, including contractors and advisors, from retaliation when reporting public safety risks, not just illegal activity. Key provisions should include making restrictive NDAs unenforceable for safety disclosures, allowing employees to share trade secrets with government oversight bodies, and ensuring fast access to federal courts. Without these protections, the information asymmetry between AI companies and regulators will persist, potentially hiding risks until it's too late to prevent catastrophic outcomes.

Insider Currents

Carefully curated summaries and links to the latest news, spotlighting the voices and concerns emerging from within AI labs.

We’re testing a different structure - featuring a larger link collection and fewer in-depth insider current articles. Let us know what you think.

Meta's Superintelligence Push: Zuckerberg's Personal Recruitment Drive:

According to Bloomberg sources, Mark Zuckerberg is personally assembling a secretive "superintelligence team" after growing frustrated with Meta's AI shortfalls. The CEO has rearranged desks at Meta's Menlo Park headquarters so approximately 50 new hires will sit near him, and created a WhatsApp group called "Recruiting Party" where executives discuss potential targets around the clock. Bloomberg reports that Zuckerberg handles initial outreach himself and stays in regular contact throughout hiring processes, pitching recruits during lunches and dinners at his California homes.

The recruitment drive follows disappointment with Llama 4's performance in April and the delayed launch of Meta's "Behemoth" model, which leadership determined didn't sufficiently advance on previous versions. According to people familiar with the matter, AI-focused staff have been working nights and weekends under pressure to meet Zuckerberg's year-end goals for the best AI offering in terms of usage and performance. The push includes Meta's $14.3 billion investment in Scale AI, bringing founder Alexandr Wang to lead the new team.

Meta's aggressive talent acquisition has extended to offering $100 million signing bonuses to OpenAI employees, according to CEO Sam Altman on the Uncapped podcast. "I've heard that Meta thinks of us as their biggest competitor," Altman said, though he claimed none of OpenAI's top talent accepted the offers. CNBC reports that after failing to acquire Ilya Sutskever's $32 billion startup Safe Superintelligence, Meta moved to hire its CEO Daniel Gross and former GitHub CEO Nat Friedman, while taking a stake in their venture fund. The talent war reflects broader concerns about Meta's AI trajectory, with internal doubts about product direction and continued high-level departures from the company's AI units.

→ Read the Bloomberg Article

→ Read the Reuters Article

→ Read the CNBC Article

OpenAI Changes Up ‘Insider Risk’ Team:

According to sources familiar with the matter, OpenAI has laid off some members of its insider risk team and plans to revamp the unit as the company faces new internal security threats. The company confirmed the changes, stating it aims to restructure the team to address evolving risks as OpenAI has grown and expanded its vendor relationships.

The restructuring reflects growing industry concerns about protecting AI model weights from internal threats. Anthropic recently implemented "ASL-3 Security Standards" with "more than 100 different security controls" for its Claude Opus 4 release, though the company acknowledged that "sophisticated insider risk" remains beyond current protections. These specialized teams focus on threats from employees or contractors who could steal company assets, distinct from general AI safety work. OpenAI's job postings describe roles involving detecting internal threats and monitoring for "foreign government involvement in IP theft," stating the company sought investigators "as a part of our commitment to the White House."

→ Read The Information Article

→ Archived OpenAI Job Listing for Context on Insider Risk Team, Related Announcement

→ Anthropic ASL-3 Announcements incl. Context on Insider Threats, Discussion on LessWrong

Assorted Links

OpenAI

OpenAI Seeks New Financial Concessions From Microsoft, a Top Shareholder

→ Read the full article

OpenAI Employees Have Cashed Out $3 Billion in Shares

→ Read the full article

OpenAI Has Discussed Raising Money From Saudi Arabia, Indian Investors

→ Read the full article

Google

Google Reportedly Plans to Cut Ties with Scale AI After Meta Deal

→ Read the full article

Google Offers Buyouts to Employees in Search and Ads, Other Units

→ Read the full article

Documents reveal what tool Google used to try to beat ChatGPT: ChatGPT itself

Meta

Meta Agreed to Pay up for Scale AI but Then Wanted More for Its Money

→ Read the full article

Recent Research Highlights

Find here relevant research in the context of AI whistleblowing.

Whistleblower Program to Combat AI Chip Smuggling & Recent Report by CNAS

According to Senators Mike Rounds (R-S.D.) and Mark Warner (D-Va.), who introduced the "Stop Stealing our Chips Act" in April 2025, a whistleblower incentive program could address systematic failures in enforcing U.S. export controls on AI chips. The proposed legislation would provide whistleblowers with 10 to 30 percent of collected fines from export control violations, modeled after the SEC's program that has awarded over $2.2 billion to whistleblowers since 2010 and collected penalties estimated between $7 billion and $22 billion.

The enforcement gap is substantial. A June 2025 report from the Center for a New American Security estimates that between 10,000 and several hundred thousand AI chips may have been smuggled into China in 2024, with a median estimate of approximately 140,000 chips. Six news outlets have independently documented large-scale smuggling operations, including cases worth $120 million for 2,400 NVIDIA H100 chips and another worth $103 million, according to The Information. Singapore authorities arrested three individuals in March 2025 suspected of diverting AI servers worth $390 million. Of the 22 notable AI models developed exclusively in China by early 2025, only two were trained with domestic chips.

The Bureau of Industry and Security, which administers export controls, faces severe resource constraints despite expanded responsibilities. A single export control officer currently handles all of Southeast Asia and Australasia, while the agency's budget has remained essentially flat for over a decade when adjusted for inflation. The proposed self-funding whistleblower program could provide resources while creating financial incentives for insiders to expose smuggling networks, potentially making these operations significantly riskier for violators.

→ Read the June CNAS Report on Countering AI Chip Smuggling as a National Security Priority

→ Read The Information Article

→ Read the Reuters Report on $390 million server fraud case

→ Read the Whistleblowing Programme Legislation Proposal

→ Read the Foundation For American Innovation Article

Policy & Legal Updates

Updates on regulations with a focus on safeguarding individuals who voice concerns.

RAISE Act has Passed New York Assembly - Whistleblower Protections Removed in Last Iteration (But Not All is Lost)

New York State’s AI Transparency Bill, the “RAISE” Act, passed the New York Assembly late last week and now awaits Governor Kathy Hochul’s signature to enter into force. We won’t repeat the core contents here: Zvi and CSAI wrote good summaries, which you can find below.

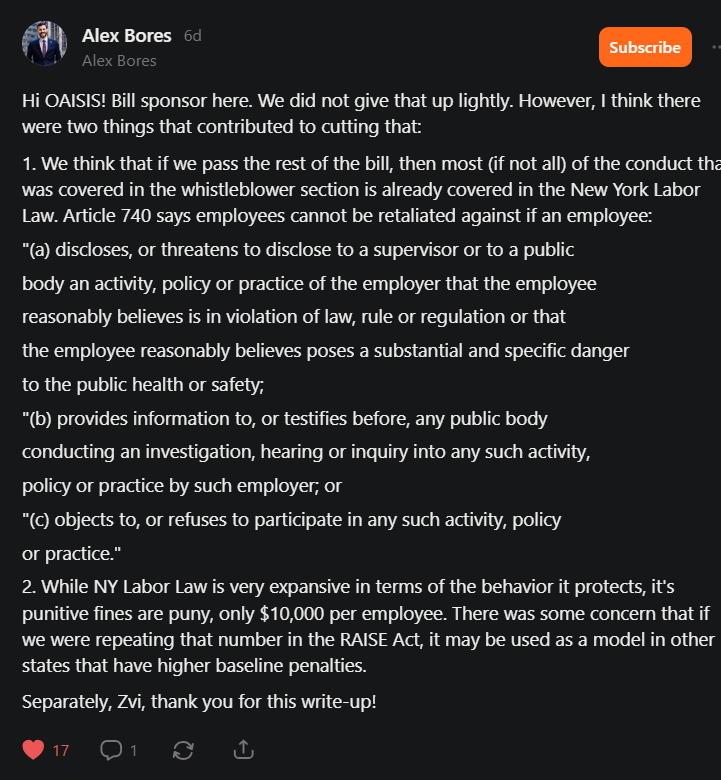

Version A of the bill, submitted on June 3, still included explicit whistleblower protections (Section 1422). The revised version, introduced on June 9 and passed on June 12, no longer included these provisions.

What is the impact of this whistleblowing section being cut?

It is not too dramatic. Section 740 of the New York Labor Law already contains whistleblower provisions, protecting against retaliation…

Current and Former Employees and Contractors who are natural persons…

…disclosing or threatening to disclose to a supervisor or public body…

…suspected breaches of the law or…

…behaviour posing “substantial and specific danger to the public health or safety” (both based on ‘reasonable belief’)

So what would the RAISE Act have improved upon?

Largely, the RAISE Act repeated the provisions of Section 740. It would’ve improved on it by:

Extending protection to unpaid contractors and advisors, as well as to non-natural persons (e.g. eval providers). This is essentially the only, and the largest, expansion on the existing Section 740.

Slightly improved language: “unreasonable or substantial risk of critical harm” vs. “substantial and specific danger to the public health or safety”. However, any “unreasonable or substantial risk of critical harm” is extremely likely to be covered by Section 740 already, meaning that in practice there would likely have been little to no extension here.

This is of course separate from other limitations of Section 740 (e.g. insufficient burden of proof reversal, limited fines, or no allowance for public disclosure in the case of imminent threat), which lawmakers could’ve attempted to allow for in RAISE.

We had voiced the former sentiment in the comments of The Zvi’s Post - with

taking the time to respond on their reasoning for making the changes (link to comment). We especially find the reasoning around not cementing the USD 10,000 penalty, which we agree is far too low, convincing.

Joint California Working Group Recommends Whistleblower Protections

Following Gavin Newsom’s Veto of SB 1047, the Joint California Working Group has concluded its overview of policy recommendations for regulating AI.

A critical element of their recommendations is transparency, following a ‘trust but verify’ approach. They recognize whistleblower protections as a core verification and enforcement method.

It is very good to see the working group treat these protections as important, and certain elements of their recommendations reflect international best practices, for example:

Protecting reports that go beyond violations of the “letter of the law”, as the exact shape that severe risks might take may be difficult to codify.

Requiring only ‘reasonable cause’ or ‘good faith’ around disclosures to unlock protections rather than definite proof.

However, we believe the working group could have extended its comparison of global standards, and the accompanying recommendations, quite significantly, although we assume the Working Group was to an extent limited by California law. We will share a longer piece on this in the coming days. In a nutshell, the EU Whistleblowing Directive alone is significantly more expansive than described in the report, in areas of…

Personal Scope (protecting far more individuals than just employees and contractors)

Public disclosure rights for imminent threats

Retaliation protections

Timelines for handling and responding to reports

Independence requirements for internal channels

Training & Transparency requirements for covered persons and company “internal” whistleblowing channels

The Report also did not mention, for example, expanding fines for violations (currently low in California) or the importance of equipping the regulatory bodies that receive reports with the resources to actually handle cases.

Overall, we still commend the working group for highlighting whistleblower protections in such a prominent fashion.

Thank you for trusting OAISIS as your source for insights on protecting and empowering insiders who raise concerns within AI labs.

Your feedback is crucial to our mission. We invite you to share any thoughts, questions, or suggestions for future topics so that we can collaboratively enhance our understanding of the challenges and risks faced by those within AI labs. Together, we can continue to amplify and safeguard the voices of those working within AI labs who courageously address the challenges and risks they encounter.

If you found this newsletter valuable, please consider sharing it with colleagues or peers who are equally invested in shaping a safe and ethical future for AI.

Until next time,

The OAISIS Team